From Attic to Archive - A Guide to OCR Correction with Generative AI

Introduction

Years ago my uncle gave me a printed autobiography written by August Anton (1830-1911), my great-great-grandfather. It was an interesting 30-page document detailing his childhood in Germany, his involvement in the 1848 revolution, and his journey to America. I read it and shared it with my kids, as a link to a side of the family I did not know very well.

As the years passed, I started thinking about digitizing it since only a handful of copies existed, but I kept procrastinating. The document was a photocopy of a photocopy; generations of degradation had left it readable but far from pristine. When I started experimenting with GenAI models, I decided to use this manuscript as a practical test case. I used my iPhone as a “worst-case scenario,” taking intentionally mediocre photos to see how well I could do without anything specialized, not even a flatbed scanner.

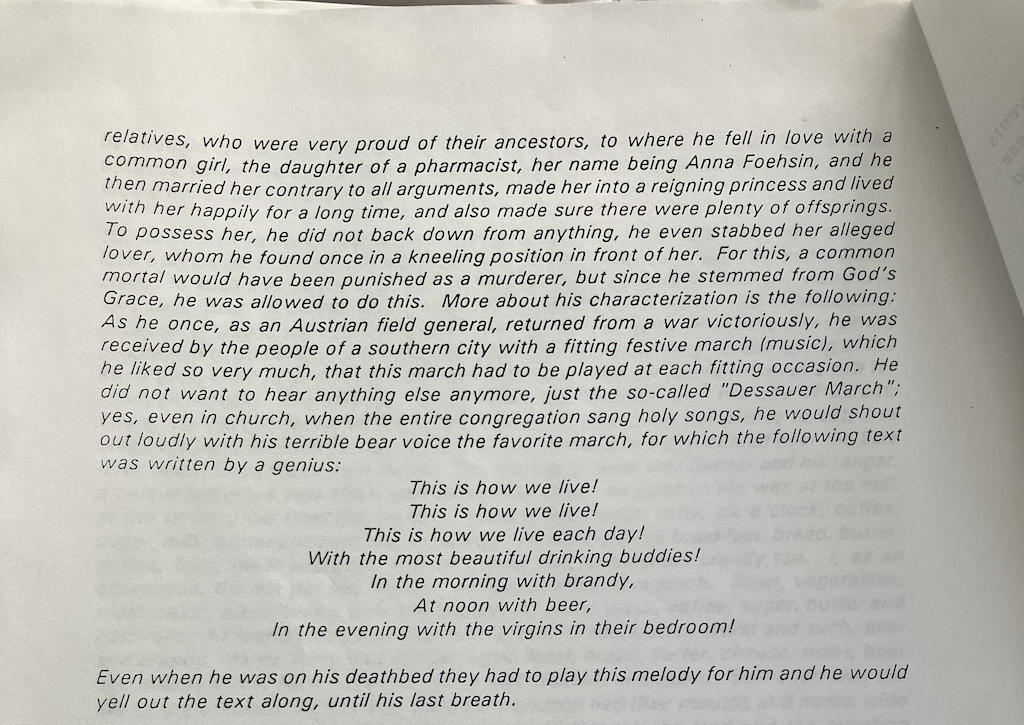

An example page showing the typical challenges: aged paper, faded ink, and photocopying artifacts.

What started as a personal project to preserve family history turned into a deep dive into production-ready OCR systems. In this article, I’ll walk you through what I built and what I learned:

- Intelligent Preprocessing - Optimizing aged document images for OCR accuracy

- Region-Based Extraction - Maintaining document structure and reading order

- AI-Powered Correction - Using GPT-5 to fix OCR errors while preserving meaning

- Performance Benchmarking - Measuring accuracy and understanding trade-offs

Whether you’re digitizing your own family archives or building document management systems, I hope my experience provides a useful foundation.

OCR Performance and Metrics

To measure the system’s performance, I used Character Error Rate (CER)—the standard metric for OCR quality:

CER = (substitutions + deletions + insertions) / total characters in the reference text

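A CER of 0.0 means a perfect match; 0.082 means 91.8% accuracy. Because insertions count as errors, CER can exceed 1.0 when the output contains far more text than the reference.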

I also used Word Error Rate (WER) as a secondary metric. It’s calculated similarly but operates on words instead of characters:

WER = (word substitutions + word deletions + word insertions) / total words in the reference text
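As a minimal sketch, both metrics can be computed with a plain Levenshtein edit distance. The function names here are illustrative assumptions rather than the code used for the benchmark, and the sketch does no text normalization (case, whitespace), which a real benchmark would need to decide on.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    # prev[j] holds the distance between the previous prefix of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion (item missing from hyp)
                curr[j - 1] + 1,     # insertion (extra item in hyp)
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character Error Rate: character edits divided by reference length."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


def wer(reference, hypothesis):
    """Word Error Rate: word edits divided by the number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference text must not be empty")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)
```

For example, `cer("Germany", "Gernany")` counts one substitution over seven reference characters, while a hypothesis much longer than the reference pushes the value above 1.0.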

Here’s what I measured for CER on 5 pages of the August Anton documents:

| Approach | CER | Processing Time |
|---|---|---|
| Pytesseract alone | 0.082 (91.8% accuracy) | 3.28s/page |
| Pytesseract + GPT-5 | 0.079* | 259.67s/page |

*The improved prompt was critical. My first GPT-5 attempt actually made things worse (CER >1.0) because the model over-edited the text.

Key insight: For clean printed text, Pytesseract alone delivers 91.8% accuracy, better than I expected. GPT-5 correction pushed it slightly higher while fixing the systematic errors that made the text harder to read. The real value wasn’t the marginal CER improvement, but fixing awkward errors, like the letter “I” being read as a pipe character (|), that made sentences nonsensical. These small fixes had an outsized impact on readability.

Preprocessing and Region-Based Extraction

When I started this project, I assumed the hard part would be the OCR itself. I was wrong—the hard part was preparing the images so the OCR could succeed. Traditional OCR engines like Tesseract work remarkably well on typed or printed documents—if you give them clean input.

The August Anton autobiography presented typical historical document challenges: aged paper with yellowing that confused color-based algorithms, faded ink from multiple generations of photocopying, scanner noise and iPhone camera artifacts, and occasional multi-column layouts that confused reading order.

The Preprocessing Pipeline

After researching and trying several approaches, I settled on a three-step pipeline:

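    import cv2

    def preprocess_image(img):
        """Prepare an RGB page image for OCR; returns an inverted binary image."""
        # 1. Convert to grayscale - eliminates color variation
        # (assumes RGB channel order; images loaded with cv2.imread are BGR
        # and would need cv2.COLOR_BGR2GRAY instead)
        gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
        # 2. Median blur - removes noise while preserving edges
        blurred = cv2.medianBlur(gray, 5)
        # 3. Otsu's thresholding - automatically finds optimal threshold
        # (THRESH_BINARY_INV yields white text on a black background)
        _, thresh = cv2.threshold(blurred, 0, 255,
                                  cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)
        return thresh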

Each step serves a specific purpose. Grayscale conversion eliminates the color variation from aged paper while preserving the text contrast that matters for OCR. Median blur removes salt-and-pepper noise from photocopying while preserving edges—unlike Gaussian blur, it won’t smear the text. I tested kernel sizes from 3 to 9 and found that 5 gave the best balance between noise removal and text preservation. And Otsu’s thresholding automatically finds the optimal threshold for binarization by analyzing the image histogram, so I didn’t have to manually tune it for each page.
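To make the histogram analysis behind Otsu’s method concrete, here is a pure-Python sketch of the idea: try every candidate threshold and keep the one that maximizes the between-class variance of the two pixel groups. This is an illustration only; the pipeline itself relies on OpenCV’s built-in `cv2.THRESH_OTSU`, the function name `otsu_threshold` is an assumption, and OpenCV may return a slightly different value when several thresholds separate the classes equally well.

```python
def otsu_threshold(pixels):
    """Return an Otsu threshold for an iterable of 8-bit grayscale values."""
    # Build the 256-bin intensity histogram.
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_low = 0    # number of pixels with intensity <= t
    sum_low = 0  # sum of intensities of those pixels
    for t in range(256):
        w_low += hist[t]
        sum_low += t * hist[t]
        if w_low == 0:
            continue  # no pixels in the lower class yet
        w_high = total - w_low
        if w_high == 0:
            break     # every pixel is already in the lower class
        mean_low = sum_low / w_low
        mean_high = (sum_all - sum_low) / w_high
        # Between-class variance (up to a constant factor of 1 / total**2).
        var_between = w_low * w_high * (mean_low - mean_high) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

Pixels with values above the returned threshold fall into one class and the rest into the other, which is why no per-page tuning is needed as long as ink and paper form two reasonably distinct histogram peaks.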

Region-Based Text Extraction

My first attempt used whole-page OCR, which worked poorly—Tesseract would read text in the wrong order, especially on pages with titles or multi-column sections. The extracted text would jump around randomly, making it unreadable.

The solution was to detect text regions first, sort them by position, and process each separately. The approach uses morphological dilation to connect nearby characters into coherent regions, then sorts by Y-coordinate to preserve reading order. A simple vertical gap heuristic detects paragraph breaks—gaps larger than a threshold indicate new paragraphs, while smaller gaps indicate line breaks within paragraphs.
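A rough sketch of that approach follows, under several assumptions: the input is the white-on-black binary image produced by preprocessing, the kernel size and `paragraph_gap` values are placeholders that would need tuning per document, and both function names are hypothetical. It is not the exact implementation used for the autobiography.

```python
def detect_text_boxes(binary_img, kernel_size=(25, 5)):
    """Dilate white-on-black text so nearby characters merge, then box each blob."""
    import cv2  # imported here so the grouping logic below works without OpenCV

    # A wide, short kernel joins characters along a line more than across lines.
    kernel = cv2.getStructuringElement(cv2.MORPH_RECT, kernel_size)
    dilated = cv2.dilate(binary_img, kernel, iterations=1)
    # [-2] selects the contour list under both OpenCV 3 and OpenCV 4 return styles.
    contours = cv2.findContours(
        dilated, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE
    )[-2]
    return [cv2.boundingRect(c) for c in contours]  # (x, y, w, h) tuples


def group_into_paragraphs(boxes, paragraph_gap=30):
    """Sort boxes top to bottom and start a new paragraph at large vertical gaps."""
    ordered = sorted(boxes, key=lambda b: (b[1], b[0]))  # by y, then by x
    paragraphs = []
    prev_bottom = None
    for box in ordered:
        x, y, w, h = box
        # A gap larger than the threshold means a paragraph break.
        if prev_bottom is None or y - prev_bottom > paragraph_gap:
            paragraphs.append([])
        paragraphs[-1].append(box)
        bottom = y + h
        prev_bottom = bottom if prev_bottom is None else max(prev_bottom, bottom)
    return paragraphs
```

Each box can then be cropped from the page and passed to the OCR engine in order, with a blank line emitted between paragraph groups.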

This method handles single-column text and simple layouts well, though complex newspaper-style columns would need more sophisticated grouping that first identifies column boundaries before sorting within each column. For batch processing, the pipeline creates an audit trail: a CSV with page-level status, combined text output, and preprocessed images for manual inspection when results look wrong. Having these artifacts separately made it much easier to iterate on the OCR process and diagnose problems.
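One way such an audit trail could be written is sketched below using only the standard library. The result schema (`page`, `status`, `text`), the file names, and the function name are all assumptions made for illustration; saving the preprocessed images alongside (for instance with `cv2.imwrite`) is left out to keep the sketch dependency-free.

```python
import csv
from pathlib import Path


def write_audit_trail(results, out_dir):
    """Write a page-level status CSV and a combined text file.

    `results` is a list of dicts with hypothetical keys:
    'page' (file name), 'status' ('ok' or an error label), and 'text'.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    status_path = out / "status.csv"
    with open(status_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["page", "status", "chars"])
        writer.writeheader()
        for r in results:
            writer.writerow(
                {"page": r["page"], "status": r["status"], "chars": len(r["text"])}
            )

    # Only pages that processed cleanly go into the combined output.
    combined_path = out / "combined.txt"
    combined = "\n\n".join(r["text"] for r in results if r["status"] == "ok")
    combined_path.write_text(combined, encoding="utf-8")
    return status_path, combined_path
```

A character count of zero or a non-`ok` status in the CSV is a quick signal for which preprocessed image to open first.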

AI-Powered OCR Correction

Even after careful preprocessing, Tesseract made predictable mistakes: “rn” misread as “m” (particularly painful in German names), lowercase “l” confused with capital “I”, missing or extra spaces where text was faded, and broken words at line endings. These are exactly the kinds of errors that are tedious to fix manually but easy for a human reader to recognize from context.

I realized GPT-5 could fix these errors the same way a human would: by understanding context. A human can tell that “Gernany” should be “Germany,” and that the stray space in “Germ any” doesn’t belong. Why couldn’t an LLM do the same?

The challenge was getting GPT-5 to fix errors without “improving” the text. My first attempts failed spectacularly.

The Prompt That Made Things Worse

My initial prompt seemed reasonable:

    System: You are a helpful assistant who is an expert on the English language,
    skilled in vocabulary, pronunciation, and grammar.

    User: Correct any typos caused by bad OCR in this text, using common sense
    reasoning, responding only with the corrected text:

GPT-5 took this as license to:

- Rewrite awkward phrasings to sound more modern

- “Fix” old-fashioned word choices

- Restructure sentences for clarity

- Add punctuation it thought was missing

The result? A CER of 1.209—worse than doing nothing. I had inadvertently asked it to rewrite the autobiography rather than preserve it.

The Prompt That Worked

The successful prompt explicitly constrains the model’s behavior. Here’s the system prompt:

    You are an expert at correcting OCR errors in scanned documents.
    Your task is to fix OCR mistakes while preserving the original text structure,
    formatting, and meaning exactly as written.

And the user prompt:

    The following text was extracted from a scanned document using OCR.
    It contains OCR errors that need to be corrected.

    IMPORTANT INSTRUCTIONS:
    - Fix ONLY OCR errors (misspellings, character misrecognitions, punctuation mistakes)
    - Preserve the EXACT original structure, line breaks, spacing, and formatting
    - Do NOT rewrite, reformat, or improve the text
    - Do NOT add explanations, suggestions, or commentary
    - Do NOT change the writing style or voice
    - Return ONLY the corrected text, nothing else

    OCR text to correct:
    {text}

This achieved CER 0.079—a 93% error reduction compared to the vague prompt. The difference illustrates how critical prompt design is for this use case. Without the constraints, GPT-5 was trying to be helpful in ways that destroyed the historical authenticity I was trying to preserve.
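For readers who want to wire the prompt into code, here is a hedged sketch. The prompt text is copied from above; everything else is an assumption: the function names, the use of the OpenAI Python SDK (version 1.x) chat completions endpoint, the `"gpt-5"` model identifier, and an `OPENAI_API_KEY` set in the environment.

```python
SYSTEM_PROMPT = (
    "You are an expert at correcting OCR errors in scanned documents.\n"
    "Your task is to fix OCR mistakes while preserving the original text structure,\n"
    "formatting, and meaning exactly as written."
)

USER_TEMPLATE = """The following text was extracted from a scanned document using OCR.
It contains OCR errors that need to be corrected.

IMPORTANT INSTRUCTIONS:
- Fix ONLY OCR errors (misspellings, character misrecognitions, punctuation mistakes)
- Preserve the EXACT original structure, line breaks, spacing, and formatting
- Do NOT rewrite, reformat, or improve the text
- Do NOT add explanations, suggestions, or commentary
- Do NOT change the writing style or voice
- Return ONLY the corrected text, nothing else

OCR text to correct:
{text}"""


def build_messages(ocr_text):
    """Fill the prompt template and return a chat-style message list."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_TEMPLATE.format(text=ocr_text)},
    ]


def correct_page(ocr_text, model="gpt-5"):
    """Send one page of OCR text for correction (model name is an assumption)."""
    from openai import OpenAI  # assumes the openai>=1.0 package is installed

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(ocr_text),
    )
    return response.choices[0].message.content
```

Keeping `build_messages` separate from the network call makes it easy to inspect or log exactly what is sent for each page before committing to a batch run.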

Before and After

Here’s a snippet showing typical errors and corrections:

Raw OCR:

Approached from many sides to write down my life's memories...

Of the year '48, as far as | was personally touched by them...

in the beautiful 'and of Anhalt... born to an honest Oraper

GPT-5 Corrected:

I was approached from many sides to write down my life's memories...

of the year '48, as far as I was personally touched by them...

in the beautiful land of Anhalt... born to an honest draper

GPT-5 fixed pipe characters (|) to “I”, apostrophe-and ('and) to “land”, and “Oraper” to “draper”: exactly the kinds of systematic errors that are tedious to fix manually but trivial for an LLM with proper context.

Validation with an Interactive Viewer

To catch problems I’d miss in raw output files, I built a simple Streamlit viewer that shows four views side-by-side: original image, preprocessed image, raw OCR text, and GPT-5 corrected text. This made it easy to spot when preprocessing made things worse, when OCR failed on specific regions, or when GPT-5 over-corrected something it shouldn’t have. The viewer surfaced dozens of issues I would have missed otherwise, and made the difference between “it mostly works” and “I trust this output.” Building it took a few hours but saved many more in debugging time.
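A lightweight complement to a visual viewer is a word-level diff between the raw OCR text and the corrected text, which lists every span the model touched. The sketch below uses Python’s standard `difflib`; the function name `word_changes` is an assumption and this is not the code behind the Streamlit viewer.

```python
import difflib


def word_changes(raw_text, corrected_text):
    """Return (tag, raw_words, corrected_words) for each span the correction changed."""
    raw_words = raw_text.split()
    new_words = corrected_text.split()
    matcher = difflib.SequenceMatcher(a=raw_words, b=new_words, autojunk=False)
    return [
        (tag, " ".join(raw_words[i1:i2]), " ".join(new_words[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"  # keep only replacements, insertions, and deletions
    ]
```

A short list of single-word replacements suggests genuine OCR fixes, while long inserted or replaced spans are a hint that the model may have rewritten rather than corrected.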

GPT-5 correction made processing about 80x slower than raw OCR (259.67s versus 3.28s per page), but since it ran unattended while I did other things, the extra time didn’t matter.

Key Takeaways

After this deep dive into OCR systems, here’s what I’d tell anyone tackling a similar project:

Traditional OCR is better than you think. Pytesseract delivered 91.8% accuracy on typed historical documents. The real work is preprocessing and quality assurance, not the OCR engine itself.

Preprocessing matters enormously. Grayscale conversion, noise reduction, and thresholding aren’t exciting, but they’re essential for good results on aged documents.

LLM correction is powerful but fragile. GPT-5 can fix OCR errors beautifully with a strict prompt, but a vague prompt will make things worse. Test extensively before batch processing.

Measure objectively. Create ground truth for a few pages and calculate CER. Subjective quality assessment is misleading.

Build a viewer early. A simple validation tool will save hours of debugging and catch problems you’d miss in raw output files.

Keep intermediate artifacts. Save preprocessed images and per-stage outputs. You’ll want them when something goes wrong.

Accept that some human review is necessary. Even at ~92% accuracy, I still read the final output and corrected remaining issues. Perfect automation isn’t the goal—making the task manageable is.

Resources

The full implementation with detailed code, a Streamlit viewer, benchmark tools, and sample data is available on my website: ranton.org/g/ocr_guide. For academic background, see Smith, R.W. (2007). An Overview of the Tesseract OCR Engine. Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), 2, 629-633 and the Tesseract documentation.

I built this system to preserve my great-great-grandfather’s autobiography, but I hope it helps others preserve their own family histories. Too many historical documents sit in closets, slowly deteriorating, because digitization seems too complex or expensive. It doesn’t have to be—with open-source tools and modern AI, you can build a system that delivers production-quality results without specialized equipment or expertise.

Thank you to my late uncle for preserving August Anton’s autobiography and sharing it with the family. This project exists because he saw value in keeping family history alive.
